from keras.models import load_model

from keras.models import Sequential

from keras.layers import (

Input,

TextVectorization,

Dense,

Dropout,

Activation,

Flatten,

Embedding,

Bidirectional,

SimpleRNN,

GRU,

LSTM,

)

from keras.callbacks import TensorBoard, EarlyStopping

from keras.metrics import AUC

# Model constants.

model_name = "bidirectional_lstm_with_return_sequences_on_embedded"

max_features = 10000

sequence_length = 30

embedding_dim = 100

rnn_units = 100

results_data_path = os.path.join("..", "results")

model_file_path = os.path.join(results_data_path, model_name)

if os.path.exists(model_file_path):

# Load model

model = load_model(model_file_path)

else:

# Define vectorizer

vectorize_layer = TextVectorization(

output_mode="int",

max_tokens=max_features,

output_sequence_length=sequence_length,

)

vectorize_layer.adapt(

df.text,

batch_size=128,

)

# define NN model

model = Sequential(name=model_name)

model.add(Input(shape=(1,), dtype=tf.string))

model.add(vectorize_layer)

# Embedding layer

model.add(

Embedding(

max_features,

embedding_dim,

input_length=sequence_length,

)

)

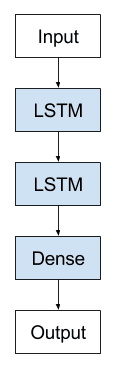

# Bidirectional LSTM layer

model.add(Bidirectional(LSTM(units=rnn_units, dropout=0.2, return_sequences=True)))

model.add(Bidirectional(LSTM(units=rnn_units, dropout=0.2)))

# Dense layers

model.add(Dense(100, input_shape=(max_features,), activation="relu"))

model.add(Dropout(0.2))

model.add(Dense(10, activation="relu"))

# Classification layer

model.add(Dense(1, activation="sigmoid"))

# compile NN network

model.compile(

loss="binary_crossentropy",

optimizer="adam",

metrics=[

"accuracy",

AUC(curve="ROC", name="ROC_AUC"),

AUC(curve="PR", name="AP"),

],

)

# fit NN model

model.fit(

X_train,

y_train,

epochs=10,

batch_size=128,

validation_split=0.2,

callbacks=[

TensorBoard(log_dir=f"logs/{model.name}"),

EarlyStopping(monitor="val_loss", patience=2),

],

workers=4,

use_multiprocessing=True,

)

model.save(model_file_path)

print(model.summary())